Background Paper No. 31

By Sarah Box

I. INTRODUCTION

Artificial intelligence (AI) is a technology high on policy agendas around the globe. AI promises economic benefits, including productivity improvements, innovative breakthroughs, scientific discovery, and more effective public services. Many also look to this technology to unlock solutions to global challenges, including growing healthcare needs and climate adaptation. At the same time, the development and application of AI presents challenges to cybersecurity, privacy, intellectual property rights, democratic processes, and national security, and is fueling geopolitical rivalry.

The United States has been one of the countries at the forefront of AI technology development, with a strong presence at all levels of the AI ecosystem. The United States lays claim to major AI developers such as OpenAI and Anthropic, and in 2023 outstripped China, the European Union, and the United Kingdom as the leading source of top AI models. Amazon Web Services and Microsoft are world leaders in cloud services and data centers. U.S. firms also lead in advanced logic chip design (Intel, NVIDIA, and AMD) and semiconductor manufacturing equipment (KLA, LAM, and Applied Materials). Private AI investments in the United States reached $67.2 billion in 2023, nearly 8.7 times more than China and a similar distance ahead of the European Union and United Kingdom. The United States has also played a strong role in international discussions of AI governance, including at the Organisation for Economic Co-operation and Development (OECD) and United Nations.

However, as the new Trump administration begins its term, there are critical questions about how to maintain AI innovation and competitiveness while also addressing issues of AI safety, international AI governance, and appropriate domestic regulatory settings. Early 2025 saw China’s DeepSeek issue a clear challenge to U.S. AI dominance, with its ChatGPT-like AI model trained at a fraction of the cost of similar models by OpenAI, Meta, and Google. The United States also faces domestic challenges, including providing sufficient energy and talent flows to the burgeoning AI sector.

This assessment considers how the Trump team may pick up where it left off in early 2021 and identifies key AI policy areas to watch as the U.S. executive branch, Congress, and state governments seek to advance their AI goals. The global dimensions of the AI ecosystem and the international reach of U.S. tech firms mean the United States’ AI policy choices will impact consumers, businesses, and governments around the world.

II. A RUNNING START – EXISTING AI POLICY FOUNDATIONS

Until relatively recently, AI policy in the United States was largely technology- and capability-focused. Federal funding of research and talent played a central role in advancing computing, with support for AI research over the 1970s, 1980s, and 1990s coming largely from the Defense Advanced Research Projects Agency (DARPA), the National Science Foundation (NSF), and the Office of Naval Research. This support provided access to and infrastructure for high-performance computing, underpinned fundamental research in areas such as speech recognition, and boosted applied research. In 1991, a Networking and Information Technology Research and Development (NITRD) program was created, which continues today and coordinates the activities of multiple agencies that invest in information technology (IT) research and development (R&D). These foundational policy choices remain relevant today, with NITRD’s National Coordination Office now working with the Office of Science and Technology Policy (OSTP) to gather public input for a new AI Action Plan to be delivered by mid-2025.

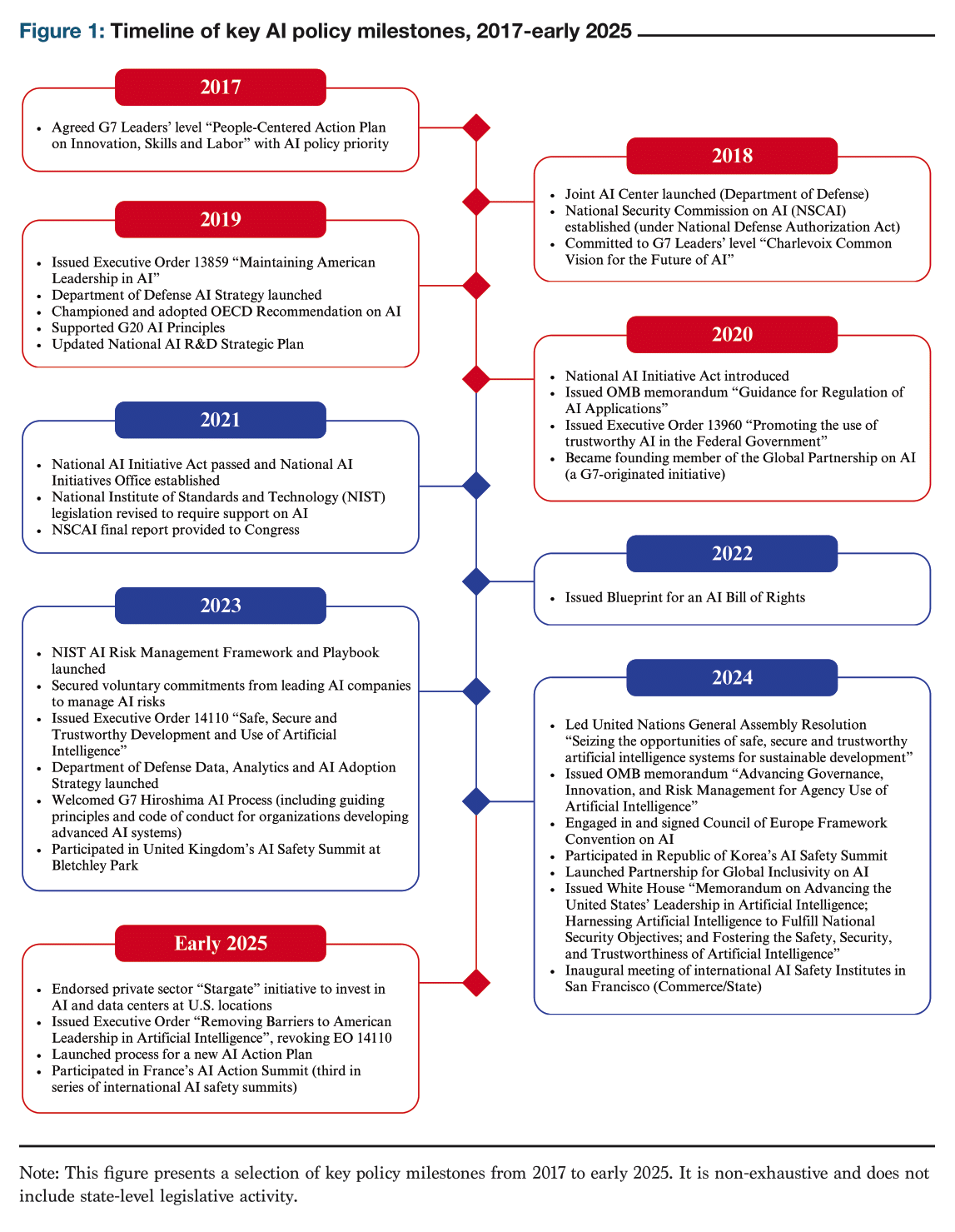

Rapid developments in AI technology and a clearer sense of its practical applications have given fresh urgency to AI policy making, including issues of AI governance, since the first Trump administration (see Figure 1 for a high-level timeline). From January 2017 to January 2021, considerable groundwork was laid, with the Trump team’s suite of policies retaining a strong focus on innovation and capability development. Establishing AI principles and guidance was also emerging as a topic internationally and the United States was active in this debate – including championing the 2019 OECD AI Principles, joining the Global Partnership on AI in 2020, and supporting discussions on AI at the G20.

The pace was especially brisk in 2019-2020. In February 2019, President Trump signed an executive order titled, “Maintaining American Leadership in Artificial Intelligence.” This elaborated an American AI Initiative to “sustain and enhance the scientific, technological, and economic leadership position of the United States in AI R&D and deployment.” The executive order was grounded in realizing the opportunities of AI and identified several areas of focus to strengthen American AI capabilities as well as foster public trust, notably looking to prioritize federal AI R&D investment, unleash AI computing and data resources for the AI research community, and build America’s AI workforce. The intent of the executive order was given effect in the bipartisan National AI Initiative Act of 2020, one outcome of which was the creation of 25 AI institutes based in universities across the country, working on different aspects of AI research – from agriculture and environmental science to adult learning, edge networks and organic synthesis – and helping boost the AI talent pipeline.

The 2020 Office of Management and Budget (OMB) memo to agencies to inform development of their regulatory and non-regulatory approaches to sectors empowered or enabled by AI is also worth noting for its potential insights into AI regulation under the second Trump administration. The memo focused on reducing barriers to AI use and included principles for regulation that are tightly aligned with common “good regulatory practice” principles (such as leveraging scientific and technical information, and pursuing flexible and technology-neutral approaches). The directive explicitly stated that agencies, “must avoid a precautionary approach that holds AI systems to an impossibly high standard such that society cannot enjoy their benefits and that could undermine America’s position as the global leader in AI innovation.”

This period also saw the origins of the influential AI Risk Management Framework (RMF) developed by the National Institute of Standards and Technology (NIST). In early 2021, Congress amended NIST’s governing act, ordering development of a voluntary risk management framework; the resulting RMF became a key pillar of the U.S. approach to AI governance as well as a touchstone for other countries developing similar tools.

The Biden administration similarly increased the pace on AI policy over time, in this case spurred by the launch of ChatGPT by OpenAI in late 2022. This was the moment when AI truly entered public consciousness, and when concerns about AI risks came to the fore, including more “existential” worries about the capabilities of AI and what this might mean for human agency and control.

The defining policy of the Biden AI era was the October 2023 executive order on “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence.” Its opening sentence – “AI holds extraordinary potential for both promise and peril” – clearly marked the difference in sentiment from the first Trump administration. While there was a shared interest in investment in skills and innovation, increasing public sector capability and protecting civil liberties, the Biden executive order went further in launching work (and in some cases, requirements on companies) around the perceived risks of AI. The Biden administration leaned into international efforts on AI safety, creating an AI Safety Institute inside NIST tasked with a variety of actions, including AI model-testing related to national security and developing benchmarks to assess AI model capabilities. The Biden administration also struck voluntary commitments with firms, including the mid-2023 White House convening of seven leading AI companies that announced commitments aimed at maintaining Americans’ rights and safety. The breadth of activity emerging from the executive order underlines the extent of the potential impacts of shifting priorities under the new president.

III. KEY QUESTIONS FOR AI POLICY UNDER THE SECOND TRUMP ADMINISTRATION

Even before the presidential inauguration, the new Trump team was gearing up for decisive AI policy actions. Since late 2024, key members of an AI team have been announced, steps are underway to walk back AI policies inconsistent with the administration’s vision, and clear messages have been sent to the international community about the United States’ position on AI.

In December 2024, President-elect Trump announced David Sacks, a venture investor, as the White House AI and Crypto Czar. Then followed the nominations of Michael Kratsios as Director of OSTP and Assistant to the President for Science and Technology, and Lynne Parker as Executive Director of the President’s Council of Advisors on Science and Technology and counselor to the OSTP Director. Both Kratsios and Parker played critical roles in the first administration, including on the international stage, and partner countries will likely be hoping the continuity implied by these announcements signals U.S. openness to ongoing international engagement on AI.

In January 2025, in line with his “Agenda 47” manifesto commitments, President Trump revoked President Biden’s AI executive order and ordered agencies to identify and rescind any actions taken under that order that hamper U.S. leadership in AI. The Paris AI Action Summit in February 2025 then provided an early opportunity for the Trump administration to communicate its position on AI policy. Addressing summit participants, Vice President JD Vance underscored four tenets: that American AI technology remains the global gold standard, that excessive regulation could damage this potentially transformative industry, that AI must remain free from ideological bias, and that the Trump administration would maintain a pro-worker growth path for AI in the United States.

After this pacey start, what lies ahead for U.S. AI policies over the next four years? Several interconnected areas are worth watching in the coming months.

The Extent of “Walk Back” on Domestic AI Guardrails

Commentary in the lead-up to and after the election suggested that while President Biden’s executive order on AI might be revoked, many actions were well in train or completed and could be hard to reverse (such as agency rules on AI use). Further, some commentators interpreted Agenda 47 references to “AI development rooted in free speech and human flourishing” as indications that Republicans do not support unbridled AI development and use, but rather focus their concerns on long-term risks over issues such as bias. Practically, the policy trajectory since 2017 shows several overlapping, bipartisan interests, particularly in building up U.S. AI capabilities, which may support continuation of certain “enabling” policies that also formed part of the Biden executive order.

Identifying the specific AI policies and directives that will be revoked by agencies will give needed clarity to all stakeholders – and clear-eyed analysis may see less change than anticipated. Biden’s order included actions to manage AI risks in critical infrastructure, attract and build AI talent, promote competition and increase resources to startups, boost AI-related education and workforce development, and steer AI discussions internationally – all goals that seem aligned to the new administration’s goal of U.S. strength and leadership in AI.

The future work of NIST, including its AI RMF and the AI Safety Institute (AISI), also needs quick and careful clarification. The RMF has become a widely accepted practical tool against which large U.S. tech firms are beginning to benchmark themselves and which has helped shape international discussions of proportionate and nuanced approaches to AI risk management across governments and private sectors. While NIST has recently updated the agreement between the AISI consortium members to remove mention of work on issues such as content authentication (which may have been considered too close to censorship), the AISI has also been tackling issues such as responsible development and use of chemical and biological AI models, which have clear national security relevance. AISI also provides an important channel to influence international debates on AI safety and security.

The Influence of China Competition on Policy

Some commentators suggest conversations on AI will now focus more on national security and competition with China than on implementing safety standards or guardrails. Think tanks and organizations aligned to conservative viewpoints were developing policy ideas along these lines in 2024. For example, it was reported that former Trump advisors were drafting a new AI executive order that would launch large scale projects to develop military technology and to review the regulatory burden on AI firms. Firms were responding, with Scale AI, Meta, and OpenAI making large language models available for national security purposes.

The focus on competition with China may give further momentum to trade and industry-oriented policies that seek to strengthen the American AI ecosystem. In early December 2024, the Biden administration implemented a third round of export controls that limited China’s ability to access and produce chips that could advance AI in military domains or other areas of national security concern. Rules were originally introduced in 2022 by the Bureau of Industry and Security (BIS), which had noted that China could use advanced semiconductors for advanced computing to modernize its military, develop nuclear weapons, and conduct espionage. In the last weeks of his administration, Biden also issued AI Diffusion Controls that, following a period of public comment, would place limits on how many data center-level GPUs countries can import, based on their ability to manufacture and secure advanced GPUs to prevent smuggling and export control violations. As of April 2025, the Trump administration has not walked these controls back.

Retaining such “tough-on-China” policies would accord with President Trump’s previous actions and would align with his willingness to use tariffs to achieve non-trade related goals. It is worth recalling, for example, that the first Trump administration banned the Chinese telecommunications equipment company Huawei from buying certain U.S. technologies without special approval and effectively barred its equipment from U.S. telecom networks on national security grounds. It is less certain that the CHIPS Act, which provides significant financial incentives for chip manufacturers to set up in the United States, will survive under the new Trump administration. However, the legislation received bipartisan support and may continue with some adjustments to how funding is spent.

The Government’s Role in Advancing the AI Energy Agenda

Estimates vary, but it is expected that data centers will account for a growing share of U.S. power usage and that additional generation and transmission capacity is urgently needed. In late 2024, Republican congressman Garret Graves suggested the current U.S. regulatory regime cannot deliver the required boost in energy for AI and that infrastructure and regulatory agendas need to be aligned. Tech firms were taking action, some working with energy companies on nuclear options, particularly small modular reactors (which can be built faster and closer to the grid), as a way to pursue carbon-free goals while increasing their energy usage. They also voiced opinions to government on what was needed to tackle AI infrastructure challenges; OpenAI, for instance, issued a blueprint for AI infrastructure that recommended – among other things – a transmission superhighway and expedited permitting.

The Stargate Project, announced by a collective of major tech firms and endorsed by President Trump in January 2025, suggests that the private sector may lead the way in tackling the AI energy question, at least for now. This initiative promises more than $500 billion of investment in AI and data centers at U.S. locations, bringing together the finance, expertise, and resources of SoftBank, OpenAI, Oracle, Microsoft, NVIDIA, and MGX. President Trump indicated the government would smooth the path for electricity production, and a later announcement on unleashing American energy suggests new sources of energy could emerge from Alaskan natural resource development, offshore drilling, and new liquefied natural gas projects.

The Effect of State-level AI Policies on AI Governance

It is not clear whether public sentiment will provide a counterweight to the perceived “low regulation” direction for AI policy and what role state governments may play in filling the gap.

Surveys have indicated Americans are increasingly cautious about the growing role of AI in their lives. In late 2023, Pew Research reported 52 percent of Americans were more concerned than excited about AI in daily life – a 14-percentage-point increase from 2022. Three-quarters of Democratic respondents and 59 percent of Republican respondents said they were more concerned about insufficient government regulation of chatbots than excessive regulation.

Similarly, an Ipsos poll showed over 70 percent of both Democrats and Republicans supported developing standards to test that AI systems are safe, establishing best practices for detecting AI content, and working with other nations to support responsible AI development. More than half of people – 59 percent – worried there would be too little federal oversight in the application of AI.

Individual states have increasingly been proposing AI-related laws in their jurisdictions. Perhaps the most talked-about example was California’s SB 1047 – the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act. This was vetoed by California’s governor but not before it had generated widespread debate. The bill focused on large-scale AI models and included obligations on companies to meet pre-training requirements related to safety and security, a prohibition on deployment of models posing an unreasonable risk of causing or enabling critical harm, and significant financial penalties for infringement.

Future iterations of the California bill could shape AI regulation more widely in the United States, given the presence of many big AI firms in the state. Given the shift to a Republican administration, it is also worth watching the Texas Responsible AI Governance Act, set for introduction in 2025. This bill aims to regulate high-risk AI systems making consequential decisions but also sets up a regulatory sandbox and aims to ensure Texas remains a friendly environment for innovation. The path of Virginia’s new bill regulating high-risk AI (HB2094) will also be instructive, as it sets a narrower scope than similar legislation in Colorado and recognizes the NIST RMF as a basis for compliance with impact assessment and other requirements.

This said, should there be an appetite for a nationwide approach to AI regulation, the window is now. Republicans hold the White House, Senate, and House of Representatives through at least 2025-2026, and with some level of bipartisan support for addressing the highest perceived risks of AI, a unified approach could emerge. While both the Senate and House of Representatives have had active discussions, no overarching AI governance-focused legislation has emerged. A Senate roadmap previously set out priorities, including addressing deepfakes related to election content, establishing a federal privacy law, and mitigating long-term risk. In early February 2025, the House Committee on Energy and Commerce formed a Privacy Working Group, which is seeking inputs on a federal comprehensive data privacy and security framework. This could mark the first step towards a federal approach to AI.

IV. CONCLUSIONS

There is substantial interest both in the United States and abroad as to what happens next on U.S. AI policy and what impact it has on the AI ecosystem. Will American companies continue to show leadership? Will the U.S. AI ecosystem become more nationalized and harder for foreigners to engage with, whether through immigration, R&D collaboration, or trade links? Will the United States still support trustworthy AI in the international policy arena with like-minded countries? Will regulatory systems across the world become more interoperable or more fragmented? What does the picture look like for firms trying to operate across borders, and for countries who are “tech takers” from big U.S. AI firms?

America’s partners will be looking for clarity, but most importantly, looking to remain engaged in dialogue on this critical technology.

Acknowledgements

Sarah Box was the 2024 New Zealand Harkness Fellow and was hosted by Observer Research Foundation America in late 2024. This background paper reflects her personal research, analysis and views, and does not represent the position of ORF America, the New Zealand Harkness Fellowships Trust or the New Zealand Government. The author is grateful to Owen Daniels of CSET for comments on an earlier version of this paper.

Note: Citations and references can be found in the PDF version of this paper available here.